Hello people…! In this post I will talk about the Quick Sort algorithm. It is an in-place sorting algorithm, which means it works on the given array itself and needs no auxiliary array (only the recursion stack), so the overhead is low. It is, however, an unstable sorting algorithm, which means that the relative order of equal elements may not be preserved. So, when an unstable sort is acceptable, quick sort is a very good and robust solution. So, let’s get started with Quick Sort…!

Quick Sort is a Divide-and-Conquer algorithm. This means the given problem is divided into smaller sub-problems, and we solve those sub-problems to get the solution of the actual problem. Here, we partition the array into two parts and then recursively apply the same procedure to each part. The process is –

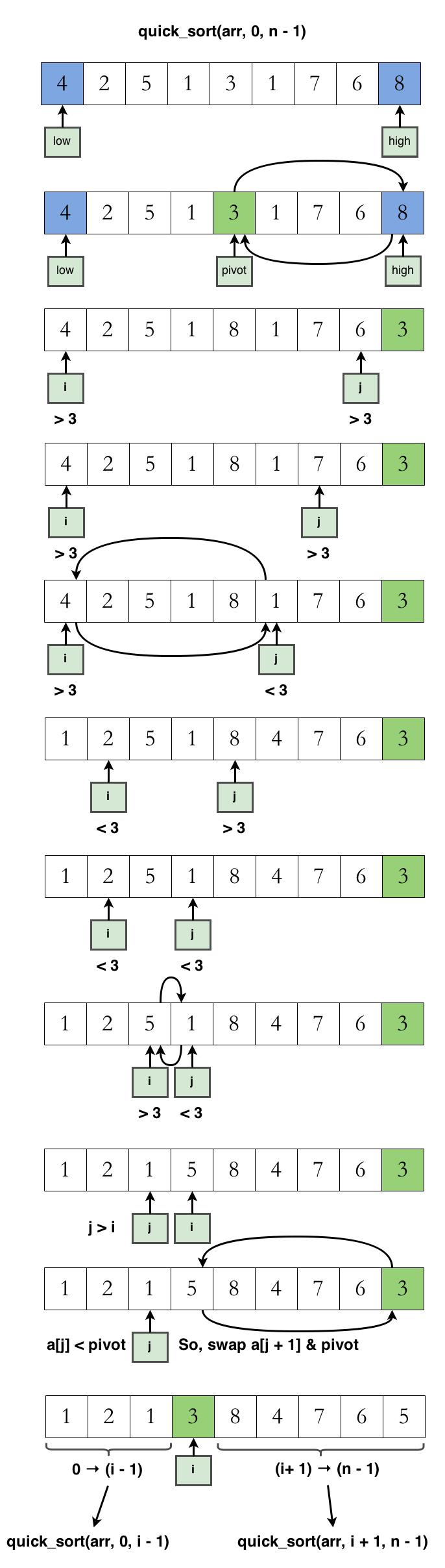

- Choose any element in the array. Make it what is called the pivot.

- Swap the pivot with the last element.

- Make a two-way scan of the remaining elements.

- Swap elements such that all the elements less than the pivot are at the left end and all the elements greater than the pivot are at the right end.

- Elements equal to the pivot can go to either side.

- Swap the pivot to the appropriate position.

- Apply the same technique to the left sub-array and the right sub-array.

- This is done in a recursive manner and the base case is when our array is of size 0 or 1. Arrays of size 0 or 1 are considered to be sorted. So we do nothing in that case.

For a better understanding of the algorithm, look at the sketch below –

The technique of quick sort is rather weird, but it is straightforward. Go through the step-by-step process a few more times and try to code the quick sort algorithm. You need to be confident with recursion if you want to get this right. If you have succeeded, well done…! If not, don’t worry, look at my code below and try to figure out what small, silly things you have missed…!
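The steps listed earlier can be sketched in C roughly as follows. This is a minimal illustration rather than the post's original listing: the names `swap_int` and `quick_sort`, the inclusive `low`/`high` bounds, and the choice of the middle element as the pivot are all assumptions made for the example.

```c
/* Hypothetical helper: exchange two ints. */
static void swap_int(int *a, int *b)
{
    int tmp = *a;
    *a = *b;
    *b = tmp;
}

/* Sorts arr[low..high] (inclusive bounds) in ascending order.
   A sketch of the listed steps, not the post's original code. */
void quick_sort(int arr[], int low, int high)
{
    if (low >= high) {
        return;                      /* base case: 0 or 1 element */
    }

    /* Choose the middle element as the pivot; this form avoids
       overflow in low + high. */
    int mid = low + ((high - low) / 2);
    swap_int(&arr[mid], &arr[high]); /* park the pivot at the end */
    int pivot = arr[high];

    /* Two-way scan over arr[low..high-1]. */
    int i = low;
    int j = high - 1;
    while (i <= j) {
        if (arr[i] <= pivot) {
            ++i;                     /* arr[i] already belongs on the left */
        } else if (arr[j] >= pivot) {
            --j;                     /* arr[j] already belongs on the right */
        } else {
            swap_int(&arr[i], &arr[j]); /* both are on the wrong side */
            ++i;
            --j;
        }
    }
    swap_int(&arr[i], &arr[high]);   /* pivot moves to its final position */

    quick_sort(arr, low, i - 1);     /* left sub-array */
    quick_sort(arr, i + 1, high);    /* right sub-array */
}
```

For an array `a` of `n` ints, the call would be `quick_sort(a, 0, n - 1)`.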

Integer Overflow – I would like to point out one thing in the code above. In line 19, we wrote “int mid = low + ((high – low) / 2)”. One could also have written “mid = (low + high) / 2”, but there is a reason why we specifically write it the former way. Suppose the value of low is INT_MAX – 5 (INT_MAX being the maximum value of an int) and the value of high is INT_MAX. Their sum is greater than INT_MAX, that is, greater than what a 32-bit signed integer can hold. In C, signed integer overflow is undefined behaviour; on most machines the value simply wraps around, with the bits going from “0111111….” to “1000000….”, and becomes negative. This is similar to the odometer in a car: when the odometer is maxed out, i.e., it shows all 9’s, it resets itself to all 0’s. There is a good depiction of this in Wikipedia. So, when we add such large values we may actually get a negative value, and it is the mean of that negative value that we would be calculating. And when you give a negative index to an array, it is most likely that your code will crash with a segmentation fault. Although this is more likely to happen when the array indices are 16-bit integers, every programmer should keep it in the back of their mind.
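To make the overflow concrete, here is a small sketch. The helper names `safe_mid` and `sum_overflows_int` are made up for this example, and the second helper assumes that `long long` is wider than `int`, which holds on common platforms but is not guaranteed by the C standard.

```c
#include <limits.h>

/* Overflow-safe midpoint; assumes 0 <= low <= high. */
int safe_mid(int low, int high)
{
    return low + ((high - low) / 2);
}

/* Returns 1 if low + high would not fit in an int.
   The sum is computed in a wider type so that this example
   itself never overflows (assumes long long is wider than int). */
int sum_overflows_int(int low, int high)
{
    long long sum = (long long)low + (long long)high;
    return sum > INT_MAX;
}
```

With `low = INT_MAX - 5` and `high = INT_MAX`, `sum_overflows_int` reports that the naive sum does not fit, while `safe_mid` returns `INT_MAX - 3`, a valid index between the two.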

The aim of this post is not only to give you an idea of the Quick Sort algorithm, but also to discuss its complexity analysis and how to make quick sort faster. The Quick Sort algorithm has the following complexities –

- Worst Case Time Complexity – O(n²)

- Average Case Time Complexity – O(n log₂ n)

- Best Case Time Complexity – O(n log₂ n)

- Space Complexity – O(n)
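As a rough sketch of where the time bounds come from, assume that partitioning a sub-array of n elements costs about cn comparisons (c is a constant introduced here only for illustration). A perfectly balanced split and a completely lopsided split then give:

$$
\begin{aligned}
T_{\text{balanced}}(n) &= 2\,T(n/2) + cn &&\Rightarrow\; T(n) = O(n \log_2 n) \\
T_{\text{lopsided}}(n) &= T(n-1) + cn = c\,\bigl(n + (n-1) + \dots + 1\bigr) = \tfrac{c\,n(n+1)}{2} &&\Rightarrow\; T(n) = O(n^2)
\end{aligned}
$$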

The O(n) space complexity of quick sort is due to the O(n) recursive calls that can pile up on the stack in the worst case. Although the complexities are fixed, the actual runtime of quick sort depends on a few factors –

- Choice of Pivot – The optimal choice of pivot in quick sort has troubled researchers for quite some time. Ideally, the pivot should split the array into two halves, or at least into two nearly equal parts. But we know nothing about the data when we partition, so we are forced to pick a pivot by some fixed rule. Sometimes, due to a poor pivot, we end up doing more partitioning than we would expect. Consider the case of a sorted array. If we always choose the first element as the pivot, every partition peels off just one element, so we end up with O(n) levels of partitioning and O(n²) work overall. Robert Sedgewick, a computer scientist, suggested taking the median of the first, middle and last elements as the pivot. When we combine this with recursing on the smaller partition first and handling the larger one iteratively (tail-call elimination), we can reduce the space complexity to O(log n), which is far less than O(n).

- Method of Partition – What we have seen till now is a 2-way partition, i.e., we partition the array into two and recursively apply our algorithm. We can do a 3-way partition, which can reduce the best case time complexity to O(n) when many elements are equal. We will discuss this shortly.
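A minimal sketch of the median-of-three idea combined with "recurse on the smaller side, loop on the larger side" could look like the following. The function names (`median_of_three`, `partition`, `quick_sort_mo3`) are assumptions for this example, and the partition step reuses the two-way scan described earlier.

```c
static void swap_int(int *a, int *b)
{
    int tmp = *a;
    *a = *b;
    *b = tmp;
}

/* Orders arr[low], arr[mid], arr[high] in place and returns mid,
   which then holds the median of the three. */
static int median_of_three(int arr[], int low, int high)
{
    int mid = low + ((high - low) / 2);
    if (arr[mid] < arr[low])  swap_int(&arr[mid], &arr[low]);
    if (arr[high] < arr[low]) swap_int(&arr[high], &arr[low]);
    if (arr[high] < arr[mid]) swap_int(&arr[high], &arr[mid]);
    return mid;
}

/* Partitions arr[low..high] and returns the pivot's final index. */
static int partition(int arr[], int low, int high)
{
    int m = median_of_three(arr, low, high);
    swap_int(&arr[m], &arr[high]);       /* park the pivot at the end */
    int pivot = arr[high];

    int i = low;
    int j = high - 1;
    while (i <= j) {
        if (arr[i] <= pivot) {
            ++i;
        } else if (arr[j] >= pivot) {
            --j;
        } else {
            swap_int(&arr[i], &arr[j]);
            ++i;
            --j;
        }
    }
    swap_int(&arr[i], &arr[high]);
    return i;
}

/* Recurses only into the smaller part and loops on the larger one,
   so the recursion depth stays at O(log n). */
void quick_sort_mo3(int arr[], int low, int high)
{
    while (low < high) {
        int p = partition(arr, low, high);
        if (p - low < high - p) {
            quick_sort_mo3(arr, low, p - 1);   /* smaller left part */
            low = p + 1;                       /* continue with right part */
        } else {
            quick_sort_mo3(arr, p + 1, high);  /* smaller right part */
            high = p - 1;                      /* continue with left part */
        }
    }
}
```

Because each recursive call handles at most half of the current range, the stack never grows beyond about log₂ n frames, even on already sorted input.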

Dual Pivot Partitioning – Until now, we did a 2-way partition, i.e., we partitioned the array into 2 parts. Now, we will partition the array into 3 parts and recursively sort the partitioned sub-arrays. When we had to divide the array into two, we simply took an element and put all the elements less than it into one part and all the elements greater than it into the other. What should we do if we want to partition the array into 3 parts? If you think for a minute or two you can easily figure out the principle. We simply pick 2 elements, say A and B with A ≤ B, and partition our array into 3 parts such that –

- The first part has all elements < A.

- The second part has all elements that are ≥ A and ≤ B.

- The third part has all elements > B.

We can put this technique in a step-by-step procedure –

- We choose the first element, array[low], and the last element, array[high], as the pivots. First, check whether array[low] < array[high]; if not, swap them.

- Take two variables lt and gt, which store indices and act as pointers. Set (lt ← low + 1), and (gt ← high – 1).

- Start iterating with i from (low + 1) towards (high – 1).

- If array[i] is less than array[low], swap array[i] and array[lt] and increment both i and lt.

- If array[i] is greater than array[high], swap array[i] and array[gt] and decrement gt only. Do not increment i, because the element that just arrived at position i has not been examined yet.

- If array[i] lies between array[low] and array[high] (inclusive), simply increment i and continue to the next iteration.

- Break out of this procedure once i crosses gt. This is because every element at an index greater than gt is already greater than array[high]; the partitioning guarantees this, so those elements are in their proper part.

- Lastly, swap array[low] and array[lt – 1], and swap array[gt + 1] and array[high].

- Recursively apply the algorithm to the three partitioned sub-arrays.

- The base case is a sub-array with zero or one element, which needs no further sorting.

It is a simple procedure. You just need to be careful with the variables, and the base case must be dealt with appropriately. Try to code this technique. I’m sure you’ll get it. If you didn’t, I have put my code below –
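One possible C rendering of the dual-pivot procedure is sketched below. It is an illustration under a few assumptions, not the post's original listing: the function name `dual_pivot_quick_sort` is invented here, the bounds are inclusive, and the upper pointer is called `gt`.

```c
static void swap_int(int *a, int *b)
{
    int tmp = *a;
    *a = *b;
    *b = tmp;
}

/* Sorts arr[low..high] (inclusive) using two pivots. */
void dual_pivot_quick_sort(int arr[], int low, int high)
{
    if (low >= high) {
        return;                       /* 0 or 1 element: already sorted */
    }

    /* Make sure the left pivot is not larger than the right pivot. */
    if (arr[low] > arr[high]) {
        swap_int(&arr[low], &arr[high]);
    }
    int p = arr[low];                 /* smaller pivot */
    int q = arr[high];                /* larger pivot */

    int lt = low + 1;                 /* arr[low+1..lt-1]  <  p */
    int gt = high - 1;                /* arr[gt+1..high-1] >  q */
    int i = low + 1;                  /* arr[lt..i-1] lies in [p, q] */

    while (i <= gt) {
        if (arr[i] < p) {
            swap_int(&arr[i], &arr[lt]);
            ++i;
            ++lt;
        } else if (arr[i] > q) {
            swap_int(&arr[i], &arr[gt]);
            --gt;                     /* new arr[i] is still unexamined */
        } else {
            ++i;
        }
    }

    /* Move both pivots to their final positions. */
    --lt;
    ++gt;
    swap_int(&arr[low], &arr[lt]);
    swap_int(&arr[high], &arr[gt]);

    dual_pivot_quick_sort(arr, low, lt - 1);     /* elements < p */
    dual_pivot_quick_sort(arr, lt + 1, gt - 1);  /* elements in [p, q] */
    dual_pivot_quick_sort(arr, gt + 1, high);    /* elements > q */
}
```

As with the plain version, an array `a` of `n` ints would be sorted with `dual_pivot_quick_sort(a, 0, n - 1)`.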

I hope you get the fundamental idea behind the partition. Now, the time complexity of this algorithm is much like that of the standard procedure. With three balanced parts the recursion is about log₃ n levels deep instead of log₂ n, and for a given value of n, log₃ n is smaller than log₂ n. The two differ only by a constant factor (log₂ n = log₂ 3 × log₃ n ≈ 1.585 × log₃ n), so in Big-O terms both variants are O(n log n) and any gain is a constant-factor one. This difference is not significant for small values of n. Some consider the dual-pivot partition to add more overhead to the standard procedure. You must choose the technique that best fits your demands.

Random Pivot – There is another optimization technique that is often implemented in quick sort. This is making the choice of the pivot random. Instead of picking the first, or last, or middle element as the pivot we pick a random element in the given range of numbers and partition the array as usual. Using a random number generator, we can pick a random index from a range of indices and partition the array accordingly. You can see it in the code below –
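A random pivot can be sketched in C as follows. The name `random_quick_sort` is an assumption for this example, and the pivot index comes from the standard `rand()` function; seeding with `srand()` is left to the caller, and the slight modulo bias of `rand() % range` is accepted here for simplicity.

```c
#include <stdlib.h>   /* rand */

static void swap_int(int *a, int *b)
{
    int tmp = *a;
    *a = *b;
    *b = tmp;
}

/* Sorts arr[low..high] (inclusive) with a randomly chosen pivot. */
void random_quick_sort(int arr[], int low, int high)
{
    if (low >= high) {
        return;
    }

    /* Pick a random index in [low, high] and park that element
       at the end, then partition as usual. */
    int r = low + rand() % (high - low + 1);
    swap_int(&arr[r], &arr[high]);
    int pivot = arr[high];

    int i = low;
    int j = high - 1;
    while (i <= j) {
        if (arr[i] <= pivot) {
            ++i;
        } else if (arr[j] >= pivot) {
            --j;
        } else {
            swap_int(&arr[i], &arr[j]);
            ++i;
            --j;
        }
    }
    swap_int(&arr[i], &arr[high]);

    random_quick_sort(arr, low, i - 1);
    random_quick_sort(arr, i + 1, high);
}
```

The point of the randomness is that no fixed input, such as an already sorted array, can reliably trigger the worst case.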

Dijkstra’s 3-way partitioning – Dijkstra’s 3-way partitioning is another approach to partition the array into 3 parts. If the pivot element is ‘P’, then Dijkstra’s 3-way partitioning divides the array into elements which are less than P (left partition), elements which are equal to P (middle partition) and elements which are greater than P (right partition). And since the middle partition is just a collection of equal items, it is considered to be sorted and we recursively call the quick sort on the left and the right partitions. This approach performs far better than the other implementations if the numbers repeat very frequently.
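The three-way split described above might be written in C like this. It is a sketch with assumed names (`quick_sort_3way`, `lt`, `gt`) and, for simplicity, it takes the first element of the range as the pivot P.

```c
static void swap_int(int *a, int *b)
{
    int tmp = *a;
    *a = *b;
    *b = tmp;
}

/* Sorts arr[low..high] (inclusive) using a less / equal / greater split. */
void quick_sort_3way(int arr[], int low, int high)
{
    if (low >= high) {
        return;
    }

    int pivot = arr[low];
    int lt = low;        /* arr[low..lt-1]  <  pivot */
    int gt = high;       /* arr[gt+1..high] >  pivot */
    int i = low + 1;     /* arr[lt..i-1]    == pivot */

    while (i <= gt) {
        if (arr[i] < pivot) {
            swap_int(&arr[lt], &arr[i]);
            ++lt;
            ++i;
        } else if (arr[i] > pivot) {
            swap_int(&arr[i], &arr[gt]);
            --gt;        /* new arr[i] is still unexamined */
        } else {
            ++i;         /* equal to the pivot: stays in the middle */
        }
    }

    /* arr[lt..gt] now holds every copy of the pivot and is done. */
    quick_sort_3way(arr, low, lt - 1);
    quick_sort_3way(arr, gt + 1, high);
}
```

If every element is equal, the loop finishes in one pass and both recursive calls hit the base case immediately, which is the O(n) best case.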

If you have any doubts, feel free to leave a comment. Now that we have learnt so many variants of Quick Sort, which one do you think is the fastest? I did a comparison of the Quick Sort variants for a Weekly Discussion on the blog’s FB page. And this is what I got –

As you can expect, the Dual-Pivot variant ran faster than the rest, which fits the shallower recursion (about log₃ n levels) of Dual Pivot partitioning. You might wonder: if partitioning into three gives n log₃ n, will a quick sort with n pivots give n logₙ n, which is just n? Can we really achieve a linear-time Quick Sort? Well, it is a nice question, but remember that when we were doing dual pivot partitioning, we needed the two pivots in sorted order. Because there were only two elements, a single comparison was enough. So, if you wanted n-pivot partitioning in Quick Sort, you could do that, but you’d need the pivots in sorted order, which is nothing but sorting the elements themselves. You see? O(n) complexity sounded awesome a few moments back, but now it sounds like nonsense because we know it won’t happen!

I hope my post helped you in learning the Quick Sort algorithm and the principles behind it. If it did, let me know by commenting. Happy Coding…! 🙂

4 thoughts on “Quick Sort Algorithm”

You could have made it shorter 🙂 in the first C example code

while (j >= i) {

if (arr[j] < pivot && arr[i] > pivot) {

temp = arr[i];

arr[i] = arr[j];

arr[j] = temp;

++i;

--j;

continue;

}

if (arr[i] <= pivot) ++i;

if (arr[j] >= pivot) --j;

}

I prefer keeping it simple rather than short… But thanks anyway 🙂

This piece of code is awesome (Y)

Thank you 🙂